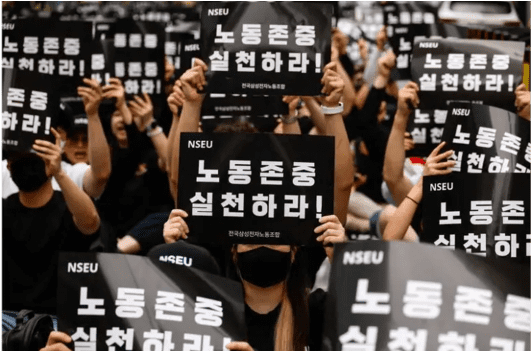

Samsung hit by the biggest strike in its history; over 6,500 employees take part

More than 6,500 employees at South Korea's Samsung Electronics began a three-day mass strike on Monday (July 8), demanding an extra day of paid annual leave, higher pay raises and changes to the way performance bonuses are currently calculated. This is the largest organized strike in Samsung Electronics' more than half a century of existence, and the union said it may call another strike if this one does not get employees' demands met. The dispute between the union and Samsung Electronics centers on three issues. The first is the number of paid vacation days. The second is pay: the union originally sought a raise of more than 3% for 855 employees, but last week it broadened the demand to cover all employees rather than just those 855. The third involves performance bonuses linked to Samsung's outsized profits. Chip workers did not receive the bonuses last year, when Samsung's chip business lost about 15 trillion won, and according to the union they fear they will still not get the money even if the company manages a turnaround this year.

TSX futures rise ahead of Fed chair Powell's testimony

July 9 (Reuters) - Futures linked to Canada's main stock index rose on the back of metal prices on Tuesday, while investors awaited U.S. Federal Reserve Chair Jerome Powell's congressional testimony on monetary policy later in the day. The S&P/TSX 60 futures were up 0.25% by 06:28 a.m. ET (1028 GMT). The Toronto Stock Exchange's materials sector was set to re[...]

Oil futures dipped as fears over supply disruption eased after Hurricane Beryl, which hit major refineries along the U.S. Gulf Coast, caused minimal impact.

Markets will be heavily focused on Powell's two-day monetary policy testimony before the Senate Banking Committee, starting at 10 a.m. ET (1400 GMT), which can help investors gauge the Fed's rate-cut path. Following last week's softer jobs data, market participants are now pricing in a 77% chance of a rate cut by the U.S. central bank in September. The main macro event for the markets this week will be the U.S. consumer prices data due on Thursday, which can help assess the trajectory of inflation in the world's biggest economy. Wall Street futures were also up on Tuesday after the S&P 500 (.SPX) and Nasdaq (.IXIC) touched record closing highs in the previous session.

In Canada, fears of the economy slipping into recession grew after the latest data showed that the unemployment rate rose to a 29-month high in June. Traders are now pricing in a 65% chance of another cut by the Bank of Canada, which already trimmed interest rates last month.

In corporate news, Cenovus Energy (CVE.TO) said it is demobilizing some staff at its Sunrise oil sands project in northern Alberta as a precaution due to the evolving wildfire situation in the area.

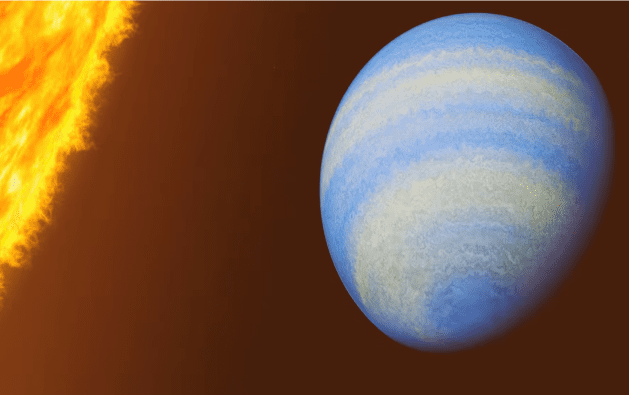

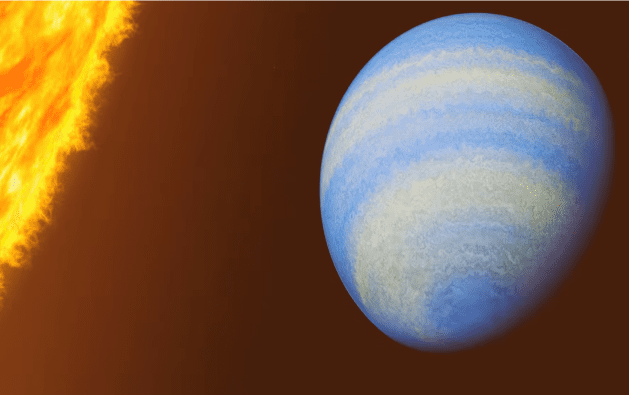

Rotten eggs chemical detected on Jupiter-like alien planet

WASHINGTON, July 8 (Reuters) - The planet known as HD 189733b, discovered in 2005, already had a reputation as a rather extreme place: a scorching hot gas giant a bit larger than Jupiter that is a striking cobalt blue color and has molten glass rain that blows sideways in its fierce atmospheric winds. So how can you top that? Add hydrogen sulfide, the chemical compound behind the stench of rotten eggs.

Researchers said on Monday new data from the James Webb Space Telescope is giving a fuller picture of HD 189733b, already among the most thoroughly studied exoplanets, as planets beyond our solar system are called. A trace amount of hydrogen sulfide was detected in its atmosphere, a first for any exoplanet. "Yes, the stinky smell would certainly add to its already infamous reputation. This is not a planet we humans want to visit, but a valuable target for furthering our understanding of planetary science," said astrophysicist Guangwei Fu of Johns Hopkins University in Baltimore, lead author of the study published in the journal Nature.

It is a type of planet called a "hot Jupiter": gas giants similar to the largest planet in our solar system, only much hotter owing to their close proximity to their host stars. This planet orbits 170 times closer to its host star than Jupiter does to the sun. It completes one orbit every two days, as opposed to the 12 years Jupiter takes for one orbit of the sun. In fact, its orbit is 13 times nearer to its host star than our innermost planet Mercury is to the sun, leaving the temperature on the side of the planet facing the star at about 1,700 degrees Fahrenheit (930 degrees Celsius). "They are quite rare," Fu said of hot Jupiters. "About less than one in 100 star systems have them." This planet is located 64 light-years from Earth, which is considered to be in our neighborhood within the Milky Way galaxy, in the constellation Vulpecula. A light-year is the distance light travels in a year, 5.9 trillion miles (9.5 trillion km).
"The close distance makes it bright and easy for detailed studies. For example, the hydrogen sulfide detection reported here would be much more challenging to make on other faraway planets," Fu said. The star it orbits is smaller and cooler than the sun, and only about a third as luminous. That star is part of a binary system, meaning it is gravitationally bound to another star. Webb, which became operational in 2022, observes a wider wavelength range than earlier space telescopes, allowing for more thorough examinations of exoplanet atmospheres.

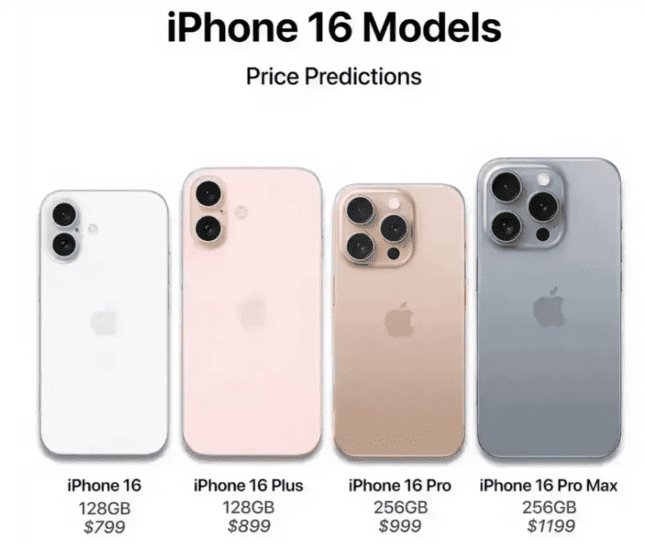

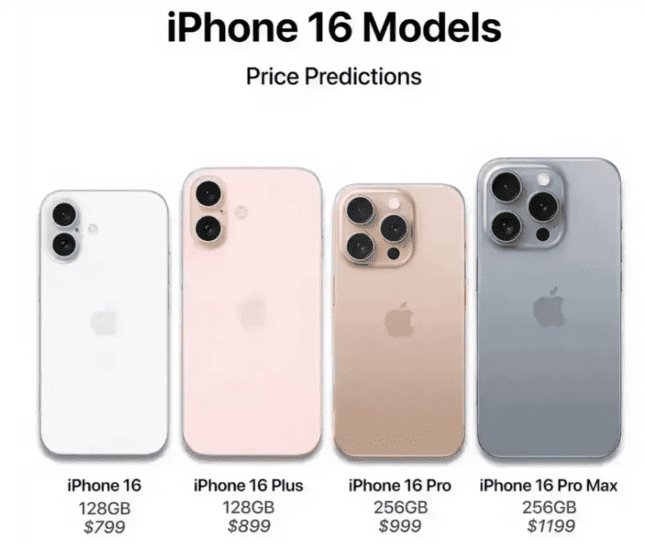

How to evaluate the product impact of the iPhone 16

At Apple's developer conference held earlier this year, it was revealed that the iPhone 16 series will come with iOS 18. At the event, Apple showed off a series of convenient interactive experiences powered by Apple Intelligence, including a more capable Siri voice assistant, a Mail app that can generate complex responses, and a Safari browser that aggregates web information. These upgrades will no doubt make the iPhone 16 line even more attractive. To run Apple Intelligence, a new feature of iOS 18, the iPhone 16 and 16 Pro series are equipped with A18 chips. A blogger found references in Apple's backend code indicating that all iPhone 16 models will use the same A-series chip; the code also mentions a new model unrelated to any existing iPhone. It lists four iPhone 16 series models whose identifiers all start with the same number, suggesting that Apple groups them on the same platform. The new iPhone will have a stainless steel battery casing, which will make the battery easier to remove in line with EU market requirements, while also allowing Apple to increase battery cell density within safety regulations.

NHTSA opens recall query into about 94,000 Jeep Wrangler 4xe SUVs

July 9 (Reuters) - The National Highway Traffic Safety Administration (NHTSA) has opened a recall query into 94,275 Stellantis-owned (STLAM.MI) Jeep SUVs over a loss of motive power, the U.S. auto safety regulator said on Tuesday. The investigation targets Jeep's Wrangler 4xe hybrid SUVs manufactured from 2021 through 2024. Chrysler had previously recalled the same model in 2022 to address concerns related to an engine shutdown. A recall query is an investigation opened by safety regulators when a remedy to solve an issue appears inadequate. The complaints noted in the new report include failures both in vehicles that received the recall remedy and in those not covered by the prior recall, the NHTSA said.